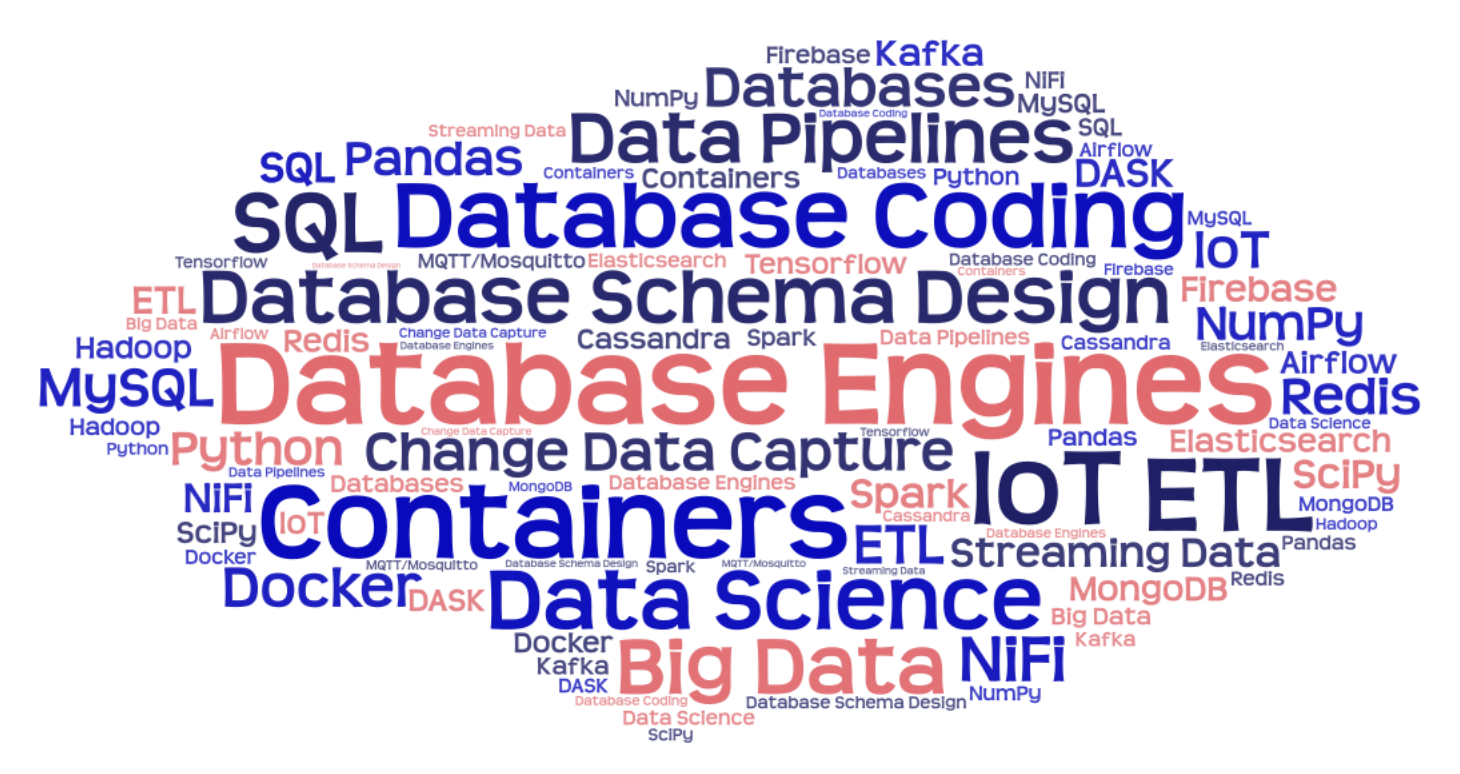

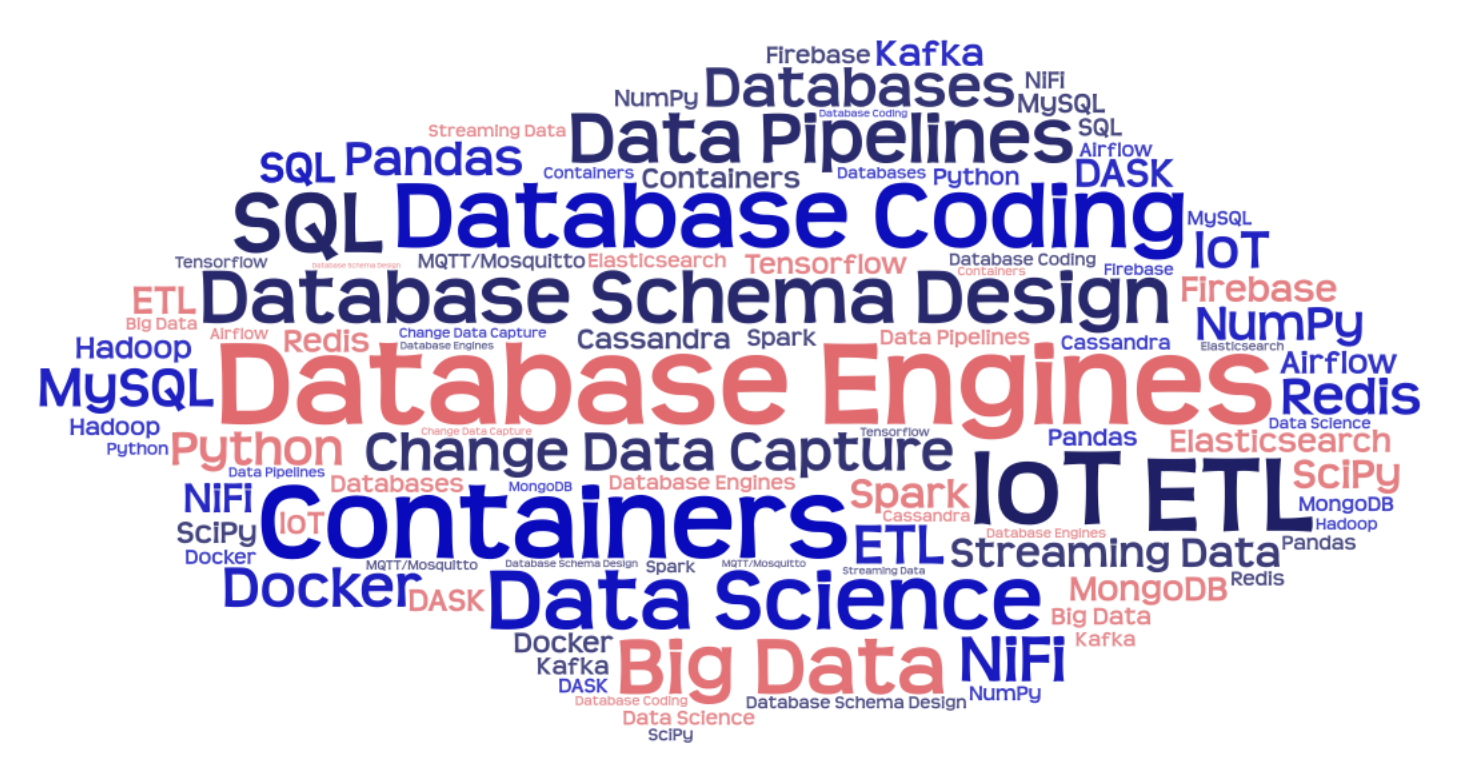

- Fast-Paced Learning of a Multitude of Technologies and Tools using Containers

- Big Data and Streaming Data using Hadoop, Spark, and Kafka

- ETL and Data Pipelines across Different Databases

- Coverage of Top 10 Most Popular Databases

- IoT and Streaming Analytics

- Advanced Data Science

You will begin with a quick introduction to containers, a standard unit of software packaging used by all major cloud providers and developer communities, and practice using them to set up major database engines quickly. Next, you will study database design, database languages, and programmatic database access so that you can design and use different types of databases professionally. You will then learn about big data engines and how to implement and scale big data applications, along with the data engineering tools that connect these systems through data pipelines. You will also learn a range of data science techniques for processing multimedia data and drawing deeper meaning from data. Finally, you will bring everything together in a capstone project that combines multiple databases, various data pipelines, big data analytics, and more, all running in a number of containers.

Author(s)

Professor Byunggu Yu (“Dan”) received his Ph.D. in Computer Science, specializing in data science and engineering, from Illinois Institute of Technology in 2000. He has served as a professor at several institutions, including the University of Wyoming (until 2006) and the University of the District of Columbia (since 2007), and was a faculty appointee at the National Institute of Standards and Technology from 2013 to 2018. He has received multiple awards from the National Science Foundation for directing research and education in databases, data science, and data engineering, and for establishing a national center of academic excellence in cyber security and information assurance.

Prerequisites (minimum requirements)

- Can run basic commands in a command terminal (Command Prompt, Terminal, Bash)

- Can understand expressions in probability and statistics, linear algebra, and differential equations

- Can install Visual Studio Code and use it to edit, run, and understand simple Python code examples without help

Topics Outline

This program consists of 10 topic areas delivered in 25 learning modules. It runs for 14.5 weeks (including 2 break weeks) and comprises 150 hours of total learning time, roughly equivalent to taking 3 to 4 university courses in a single semester.

1. Database Engines (2 Modules, 12 Hours, 1 Week)

- Containers - Docker

- Relational databases - MySQL

- Document databases - MongoDB

- Key-Value databases - Redis

- Search engine databases - Elasticsearch

- Wide-column databases - Cassandra

- Serverless cloud databases - Firebase

2. Database Schema Design (2 Modules, 12 Hours, 1 Week)

- Data-centric Approach

- Query-centric Approach

- Schemaless Approach

3. Database Coding in SQL and Python (3 Modules, 18 Hours, 1.5 Weeks)

- SQL

- Python, NumPy, Pandas

- Access Drivers for Different Databases

- Dask

4. Data Science I (2 Modules, 12 Hours, 1 Week)

- Sampling and Statistics

- p-value and Null Hypothesis

- Linear Regression

- Correlation Analysis

- Time Series Analysis

5. Working with Multiple Types of Databases (3 Modules, 18 Hours, 1.5 Weeks)

- Periodic Change Data Capture: periodic queries

- Real-time Change Data Capture: binlog events

- ETL and Data Pipelines: Python, NiFi, Airflow, Prefect on Dask

6. Data Science II (2 Modules, 12 Hours, 1 Week)

- Singular Value Decomposition

- Principal Component Analysis

- Fourier Transform, Wavelet Transform, Laplace Transform

7. Big Data (3 Modules, 18 Hours, 1.5 Weeks)

- Hadoop

- Spark

- Kafka

- IoT

8. Data Science III (6 Modules, 36 Hours, 3 Weeks)

- K-means Clustering and KNN Classification, Bayes' Theorem and Bayes Classifiers

- Confusion Matrix and Sensitivity Analysis

- Gradient Descent and Feed-Forward Neural Networks

- Recurrent Neural Networks and Long Short-Term Memory (LSTM)

- Reinforcement Learning - Q-Learning, Q-Table, and Q-Network

- Convolutional Neural Networks (CNN) and CNN-LSTM

- Attention Mechanisms in Neural Networks and Cross Validation

9. Creating Web Applications and Using GitHub (1 Module, 6 Hours, 0.5 Week)

10. Capstone Project - Advanced Research Topic (1 Module, 6 Hours, 0.5 Week)